This post is the last in a series of three. The goal is to embed a neural network into a real-time web application for image classification. In this third part, I will go through a small, yet deployable application built with the Spring Framework. Furthermore, I will elaborate on how I used Apache Kafka to put it all together and embed the micro service introduced in part 2 in my client-server environment.

Architecture Overview

In the first post of this series, Model Stack (Part 1), I demonstrated how one could use the pre-trained InceptionV3 model to build a small stack that predicts whether an image shows a cat or a dog, with an accuracy of over 99%. In the second post, Kafka Micro Service, the predictive power of the classification stack is encapsulated in a micro service that can be accessed through the Apache Kafka event bus. In this last post, I will leave the newly born realm of AI and machine learning to enter once again the already mature universe of software engineering for the web. However, I do not intend to go into great detail on how Apache Kafka or WebSockets work. Nor will I post the complete code here, as you can check it out directly from the git repo catdog-realtime-classification-webapp, build it and run it within a few minutes. The goal here is only to give you the general idea of how the micro service and the web application interact.

Apache Kafka

If you look at the official website of Apache Kafka, you will find the following statement: “Kafka is used for building real-time data pipelines and streaming apps. It is horizontally scalable, fault-tolerant, wicked fast, and runs in production in thousands of companies.” I chose Kafka for this demo mainly because of its “real-time data pipelines” capability. Horizontal scalability is also a “must” in the domain of web and business applications. There are several other promising technologies, e.g. Vert.x and Akka. Unfortunately, the current version of Vert.x (3.4.1) does not have a Python client, and Akka is native to the JVM and officially supports only Java and Scala. Besides the real-time data pipeline and the easy-to-use programmatic interfaces for both Java and Python, there are numerous “plug & play” Docker containers to choose from on GitHub. Hence, for my demo I created a fork of kafka-docker by wurstmeister. You can check out my fork here: kafka-docker. As mentioned in the README.md, one only has to install docker-compose and run “docker-compose -f docker-compose-single-broker.yml up” to get Kafka up and running.

UI with Web Sockets

The user interface of the web application is very simple. It is a slightly modified version of the JavaScript client shipped with the Spring WebSocket example: Using WebSocket to build an interactive web application.
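The Spring guide pairs this JavaScript client with a small server-side configuration class that registers a SockJS/STOMP endpoint and an in-memory broker. A minimal sketch adapted to the names the catdog client connects to (`/catdog-websocket` and `/topic/catdog`), assuming Spring 5 or later. The class name `WebSocketConfig` and the `/app` application prefix are carried over from the guide as assumptions; they are not taken from the catdog repository.

```java
import org.springframework.context.annotation.Configuration;
import org.springframework.messaging.simp.config.MessageBrokerRegistry;
import org.springframework.web.socket.config.annotation.EnableWebSocketMessageBroker;
import org.springframework.web.socket.config.annotation.StompEndpointRegistry;
import org.springframework.web.socket.config.annotation.WebSocketMessageBrokerConfigurer;

// Hypothetical server-side counterpart of the browser client, modeled on the Spring guide.
@Configuration
@EnableWebSocketMessageBroker
public class WebSocketConfig implements WebSocketMessageBrokerConfigurer {

    @Override
    public void configureMessageBroker(MessageBrokerRegistry config) {
        // In-memory broker that relays messages to subscribers of /topic/... (e.g. /topic/catdog)
        config.enableSimpleBroker("/topic");
        // Assumption from the guide: messages the browser sends to /app/... reach @MessageMapping methods
        config.setApplicationDestinationPrefixes("/app");
    }

    @Override
    public void registerStompEndpoints(StompEndpointRegistry registry) {
        // The endpoint the client opens with new SockJS('/catdog-websocket')
        registry.addEndpoint("/catdog-websocket").withSockJS();
    }
}
```

With a configuration like this, any server component can push an event to every subscribed browser with `SimpMessagingTemplate.convertAndSend("/topic/catdog", payload)`.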

The client-side logic is kept to a minimum. There is no Angular 2; the only libraries used are jQuery, SockJS and STOMP. I can only recommend this nicely written tutorial on WebSockets and STOMP. The client connects to the “/catdog-websocket” endpoint and subscribes to “/topic/catdog”:

```javascript
function connect() {
    var socket = new SockJS('/catdog-websocket');
    stompClient = Stomp.over(socket);
    stompClient.connect({}, function (frame) {
        setConnected(true);
        console.log('Connected: ' + frame);
        // Every message published to /topic/catdog is rendered by showCatDogDTO
        stompClient.subscribe('/topic/catdog', function (message) {
            showCatDogDTO(message);
        });
    });
}
```

If a message is received, the client parses its content and modifies the HTML layout by adding new rows to the top of the table:

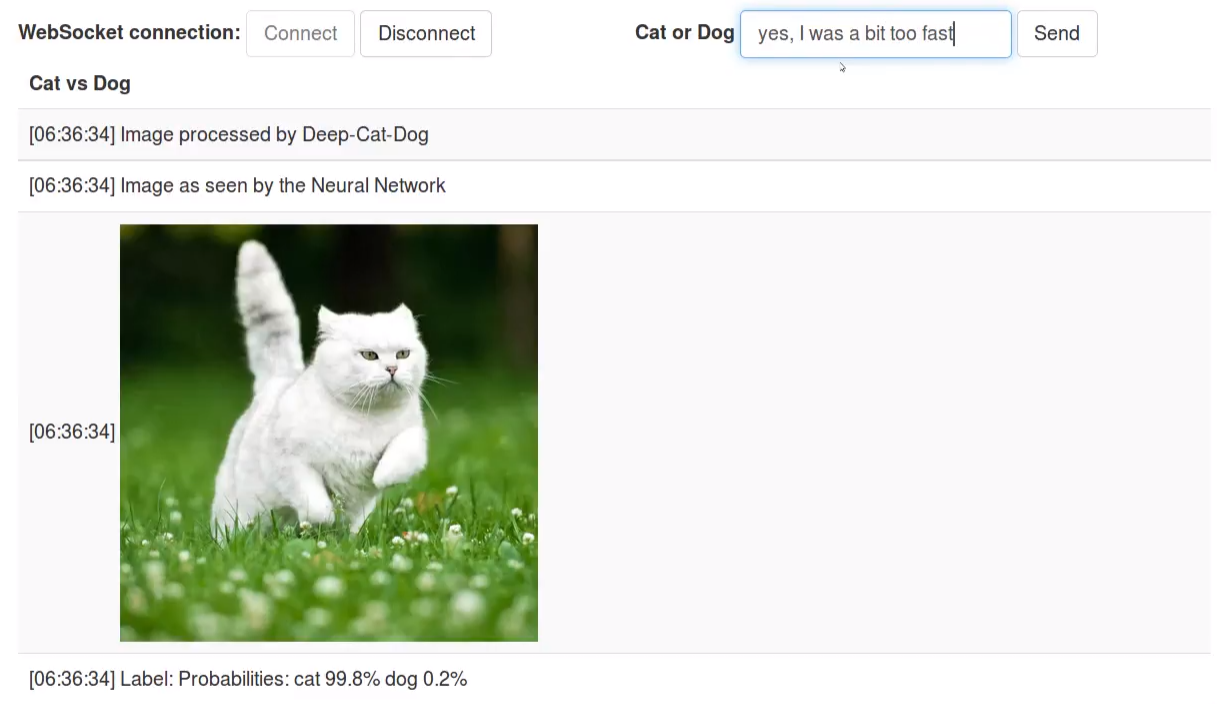

```javascript
function showCatDogDTO(unparsedMessage) {
    var time = get_time();  // get_time() is defined elsewhere in the client (not shown)
    var prefix = "<tr><td>[" + time + "] ";
    var suffix = "</td></tr>";
    var message = JSON.parse(unparsedMessage.body);
    if (message.resolved) {
        // Classification result: show the image together with its predicted label
        $("#catdogboard").prepend(
            prefix + "Image processed by Deep-Cat-Dog" + suffix +
            prefix + "Image as seen by the Neural Network" + suffix +
            prefix + "<img (...) src=\"" + message.url + "\"/>" + suffix +
            prefix + "Label: " + message.label + suffix);
    } else {
        // Any other message is shown as plain text content
        $("#catdogboard").prepend(prefix + message.content + suffix);
    }
}
```

Kafka Message Producer

Whenever the user sends a message through the input form, a Spring controller checks whether it contains a URL pointing to an image. If so, the image is downloaded and converted to PNG. The KafkaMessageProducer is then responsible for sending the message to Kafka’s event bus. It’s amazing how few lines of code are needed to create a Kafka message producer that does the job:

```java
@Component
public class KafkaMessageProducer {

    private final Logger logger = LoggerFactory.getLogger(this.getClass());

    private static final String BROKER_ADDRESS = "localhost:9092";
    private static final String KAFKA_IMAGE_TOPIC = "catdogimage";

    private Producer<String, String> kafkaProducer;

    @PostConstruct
    private void initialize() {
        Properties properties = new Properties();
        properties.put("bootstrap.servers", BROKER_ADDRESS);
        // Keys and values are sent as plain strings (the value is a JSON document)
        properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        kafkaProducer = new KafkaProducer<>(properties);
    }

    public void publish(CatDogKafkaDTO catDogEvent) {
        logger.info("Publish image with url " + catDogEvent.getUrl());
        String jsonValue;
        try {
            // Serialize the event to JSON so the Python micro service can parse it
            jsonValue = new ObjectMapper().writeValueAsString(catDogEvent);
        } catch (JsonProcessingException ex) {
            throw new IllegalStateException(ex);
        }
        // send() is asynchronous: the record is buffered and sent in the background
        this.kafkaProducer.send(new ProducerRecord<>(KAFKA_IMAGE_TOPIC, jsonValue));
    }

    @PreDestroy
    public void close() {
        // Waits for already-sent records to complete, then releases the producer's resources
        this.kafkaProducer.close();
    }
}
```

The publish call is non-blocking: KafkaProducer.send() only buffers the record and returns a Future. On the other side, the event is received and the image is classified with InceptionV3 and the xgboost classifier trained in part one: Cat vs Dog Real-time classification: Model Stack (Part 1).
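The controller step described at the start of this section (checking for an image URL, downloading the image and converting it to PNG) is not shown in the post. Below is a minimal plain-JDK sketch of that step, not the code from the repository: the class name `ImageMessageHelper`, its methods, and the rule that an “image URL” is an http(s) URL whose path ends in a common image extension are all hypothetical assumptions.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Locale;
import java.util.Set;
import javax.imageio.ImageIO;

/** Hypothetical helper: detects image URLs in chat messages and re-encodes images as PNG. */
public final class ImageMessageHelper {

    // Assumption: an "image URL" is an http(s) URL whose path ends with one of these extensions.
    private static final Set<String> IMAGE_EXTENSIONS = Set.of(".jpg", ".jpeg", ".png", ".gif", ".bmp");

    private ImageMessageHelper() {
    }

    /** Returns true if the trimmed message parses as an http(s) URL with an image-like path. */
    public static boolean looksLikeImageUrl(String message) {
        if (message == null) {
            return false;
        }
        try {
            URI uri = new URI(message.trim());
            String scheme = uri.getScheme();
            String path = uri.getPath();
            if (scheme == null || path == null) {
                return false; // relative or opaque URI, e.g. plain text without spaces
            }
            if (!scheme.equalsIgnoreCase("http") && !scheme.equalsIgnoreCase("https")) {
                return false;
            }
            String lowerPath = path.toLowerCase(Locale.ROOT);
            return IMAGE_EXTENSIONS.stream().anyMatch(lowerPath::endsWith);
        } catch (URISyntaxException e) {
            return false; // e.g. text containing spaces is not a valid URI
        }
    }

    /** Downloads an image and re-encodes it as PNG; throws IOException if it cannot be decoded. */
    public static byte[] downloadAsPng(String url) throws IOException {
        BufferedImage image = ImageIO.read(URI.create(url.trim()).toURL());
        if (image == null) {
            throw new IOException("No image decoder found for " + url);
        }
        return toPng(image);
    }

    /** Encodes an in-memory image as PNG bytes. */
    public static byte[] toPng(BufferedImage image) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!ImageIO.write(image, "png", out)) {
            throw new IOException("No PNG writer available");
        }
        return out.toByteArray();
    }
}
```

A controller could call `looksLikeImageUrl` on each incoming message and, if it returns true, download and convert the image before building the event for `KafkaMessageProducer.publish`. A production version would also want a download timeout and a size limit, since `ImageIO.read(URL)` applies neither.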

Kafka Message Consumer

Eventually, the Python script sends an event containing the classification result. For more details on how this script works, check out part 2: Cat vs Dog Real-time classification: Kafka Micro Service (Part 2). The event is received and handled by the Spring component below:

```java
// Excerpt from the consumer component: the logger, the consumer properties,
// KAFKA_LABEL_TOPIC and safelyProcessRecord() are defined elsewhere in the class (not shown).
@PostConstruct
public void initialize() {
    logger.info("initialize");
    kafkaConsumer = new KafkaConsumer<>(properties);
    kafkaConsumer.subscribe(Arrays.asList(KAFKA_LABEL_TOPIC));
}

// Runs only if scheduling is enabled (@EnableScheduling on a configuration class)
@Scheduled(fixedRate = 100)
public void consume() {
    // poll(0) returns immediately with whatever records have already been fetched
    ConsumerRecords<String, String> records = kafkaConsumer.poll(0L);
    for (ConsumerRecord<String, String> consumerRecord : records) {
        safelyProcessRecord(consumerRecord);
    }
}
```

The consume method is triggered every 100 milliseconds and polls Kafka for new messages. Each event the consumer has not handled yet is processed, and another event is sent to the browser client. There, the showCatDogDTO JavaScript function shown above is called and the data is written to the HTML page.
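To make the polling pattern concrete without a running broker, here is a plain-JDK stand-in with clearly stated substitutions: a `BlockingQueue` plays the Kafka label topic, `drainTo` plays `poll(0)` (take whatever is available, never wait), a `java.util.function.Consumer` plays the push to the browser, and a single-threaded `ScheduledExecutorService` plays Spring’s `@Scheduled(fixedRate = 100)`. The class name `PollingLabelConsumer` is hypothetical; this is a sketch of the idea, not how the repository implements it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Hypothetical plain-JDK stand-in for the scheduled Kafka consumer shown above. */
public final class PollingLabelConsumer {

    private final BlockingQueue<String> labelTopic;   // stand-in for the Kafka label topic
    private final Consumer<String> pushToBrowser;     // stand-in for sending a STOMP message

    public PollingLabelConsumer(BlockingQueue<String> labelTopic, Consumer<String> pushToBrowser) {
        this.labelTopic = labelTopic;
        this.pushToBrowser = pushToBrowser;
    }

    /** One tick: like poll(0), take whatever is available right now without waiting. */
    public int consumeOnce() {
        List<String> batch = new ArrayList<>();
        labelTopic.drainTo(batch);
        for (String record : batch) {
            try {
                pushToBrowser.accept(record);
            } catch (RuntimeException e) {
                // Same idea as safelyProcessRecord: one bad record must not stop the loop
                System.err.println("Skipping record: " + e.getMessage());
            }
        }
        return batch.size();
    }

    /** Runs consumeOnce every periodMillis on a single thread, like @Scheduled(fixedRate = ...). */
    public ScheduledExecutorService start(long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(this::consumeOnce, 0, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }
}
```

Two details carry over to the real consumer. The single scheduler thread matters because KafkaConsumer is not safe for multi-threaded access, and Spring by default also runs @Scheduled methods on a single thread. Catching exceptions per record matters because `scheduleAtFixedRate` suppresses all further runs once a task throws.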

The real-time cat / dog classifier

For the sake of simplicity, I have discussed only a small part of the web application code. Be sure to check out the git repo catdog-realtime-classification-webapp, build it and run it. Or you can watch the YouTube video below to get a taste of how everything works and feels:

As technology advances, it is becoming increasingly easy to build real-time smart applications. Data scientists can script micro services that are bound together with Apache Kafka or a similar real-time data pipeline. This way one can skip, or significantly shorten, the long production cycles in which prototypes written by data scientists are recoded in Java and embedded directly in monolithic business applications. Real-time pipelines give you a lot of flexibility: you can change scripts in production dynamically and test them on a small sample of users first. The future is surely going to be amazing.